SAP HANA Scale-out Architecture Demo

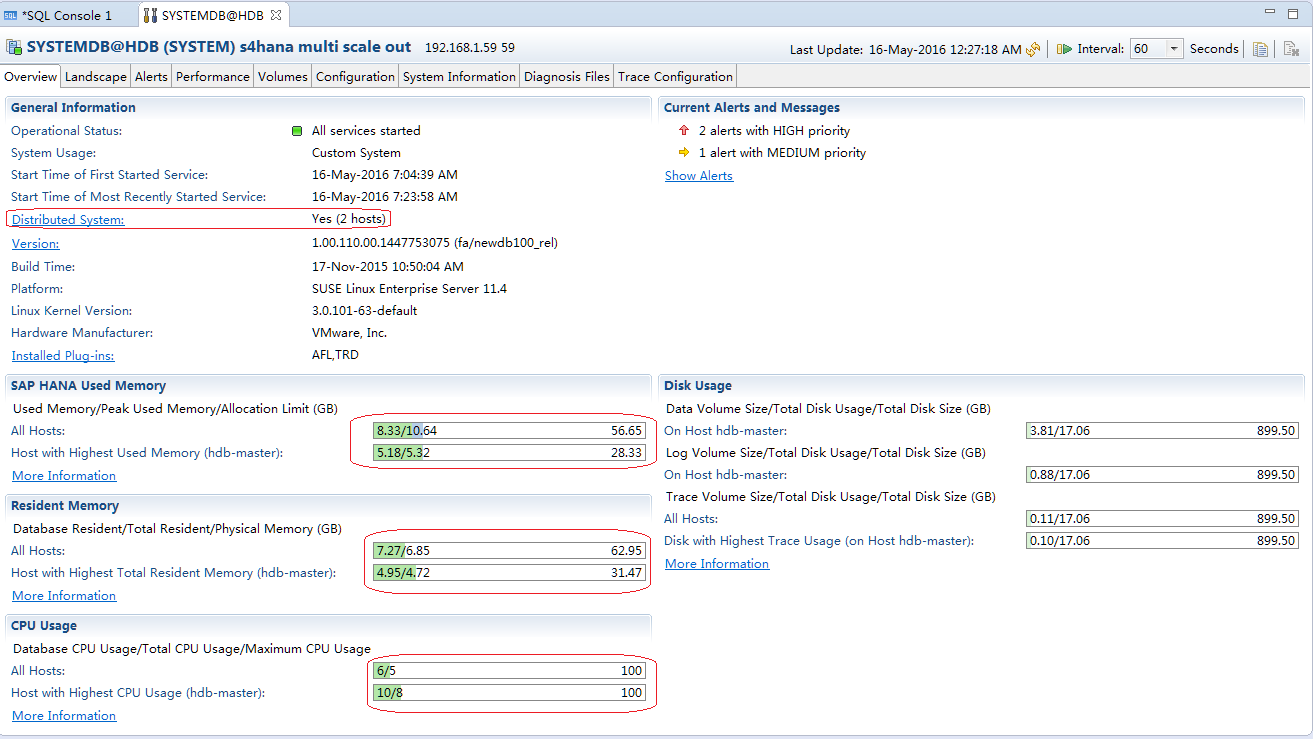

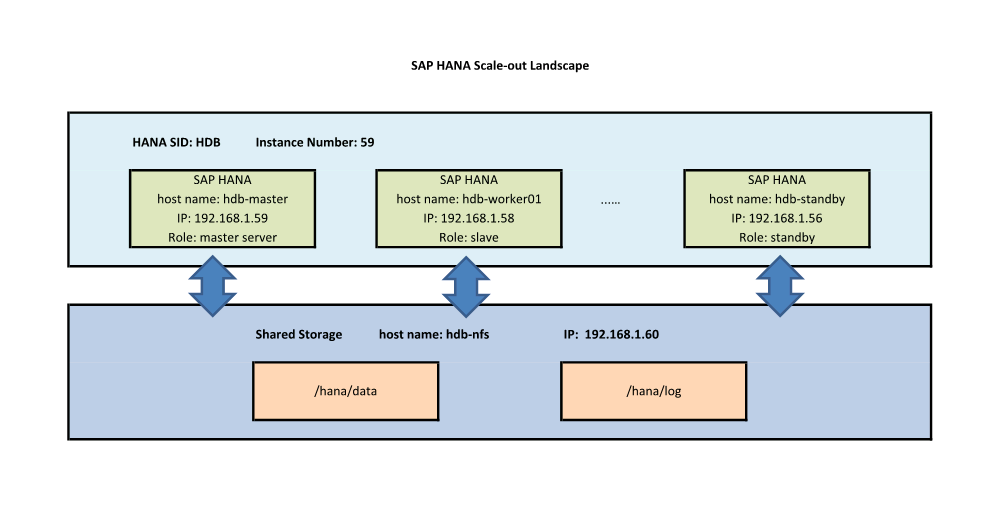

The smallest scale-out system needs at least two server nodes. To tolerate a single-node failure, add one standby node.

There are three roles in a multiple-node architecture: Master, Slave, and Standby server. Master and Slave servers process client requests; a Standby server holds no data and stays idle until it takes over for a failed node.

- Master and Slave servers can store the database and its partitions, and can also act as calculation engines.

- The Master server stores all metadata, while a Slave server only caches whatever it needs.

- In a distributed environment you can configure more than one Master, Slave, or Standby server, but only one Master server is active at a time.

- The number of Standby servers determines how many single-node failures can be covered at one time.
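The role rules above can be checked mechanically. The following is a minimal sketch, not an SAP tool: it reads a hand-written landscape description (one `host role` pair per line, where the role is the currently active role) and verifies that exactly one master is active and that at least two nodes hold data. It then reports how many single-node failures the standby nodes can absorb. The host names `hdb-slave` and `hdb-standby` and the helper name `check_landscape` are assumptions made for illustration.

```shell
#!/bin/sh
# Sketch only: validates a hand-written landscape list, it does not query SAP HANA.
# Input on stdin: one "<host> <role>" pair per line, role = master|slave|standby.
check_landscape() {
    awk '
        $2 == "master"  { master++ }
        $2 == "slave"   { slave++ }
        $2 == "standby" { standby++ }
        END {
            if (master != 1) {
                print "error: exactly one active master is required"
                exit 1
            }
            if (master + slave < 2) {
                print "error: scale-out needs at least two data-bearing nodes"
                exit 1
            }
            # Each standby node can take over for one failed node.
            printf "workers=%d standby=%d tolerated_failures=%d\n",
                   master + slave, standby, standby
        }'
}

# Hypothetical three-node layout similar to this demo.
printf '%s\n' 'hdb-master master' 'hdb-slave slave' 'hdb-standby standby' | check_landscape
```

With one standby node the sketch reports one tolerated failure, which matches the rule that the number of standby servers bounds the number of simultaneous single-node failovers.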

This demo shows how to set up a SAP HANA scale-out (multiple-node) landscape and how it works for High Availability.

In this environment there are three SAP HANA server nodes and one shared storage system, all running SLE 11 SP4 for SAP.

Step 1. Set up NFS and export the shared folder

Log in as root on the first server and set it up as the shared storage system.

hostname: hdb-nfs

IP address: 192.168.1.60

Set up a new partition/volume and mount it as /hana.

Edit /etc/exports to add the entries.

Start the NFS server.

Directory: /root/Desktop

Mon May 16 04:12:01 UTC 2016

hdb-nfs:~/Desktop # mkdir /hana

hdb-nfs:~/Desktop # vi /etc/exports

/hana 192.168.1.59(rw,no_root_squash)

/hana 192.168.1.58(rw,no_root_squash)

/hana 192.168.1.57(rw,no_root_squash)

/hana 192.168.1.56(rw,no_root_squash)

hdb-nfs:~/Desktop # /etc/init.d/nfsserver start

Starting kernel based NFS server:exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.1.59:/hana".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.1.58:/hana".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.1.57:/hana".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "192.168.1.56:/hana".

Assuming default behaviour ('no_subtree_check').

NOTE: this default has changed since nfs-utils version 1.0.x

mountd statd nfsd sm-notify done

hdb-nfs:~/Desktop # chkconfig nfsserver on

hdb-nfs:~/Desktop #
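The `exportfs` warnings in the transcript above appear because no subtree option was given for the exports. As a sketch, the entries can be generated with `no_subtree_check` spelled out, which is the default the warning says it assumes anyway. The helper name `gen_exports` is hypothetical, and the script only prints the lines; review them before putting them into /etc/exports.

```shell
#!/bin/sh
# Sketch: print /etc/exports lines for a shared directory and a list of client IPs.
# gen_exports is a hypothetical helper; it writes to stdout only.
gen_exports() {
    dir=$1
    shift
    for client in "$@"; do
        # no_subtree_check is stated explicitly to avoid the exportfs warning.
        printf '%s %s(rw,no_root_squash,no_subtree_check)\n' "$dir" "$client"
    done
}

gen_exports /hana 192.168.1.59 192.168.1.58 192.168.1.57 192.168.1.56

# After editing /etc/exports as root, `exportfs -ra` re-reads the file and
# `showmount -e localhost` lists what is currently exported.
```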

Step 2. Install SAP HANA SPS11

Log in as root on the second server to install SAP HANA SPS11.

host name: hdb-master

IP address: 192.168.1.59

Mount hdb-nfs:/hana on the local /hana directory.

Edit /etc/fstab to add the entry:

192.168.1.60:/hana /hana nfs rw,sync,hard,intr 0 0
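Appending to /etc/fstab by hand is easy to repeat by accident. A minimal sketch of an idempotent append follows; `ensure_line` is a hypothetical helper, and the example runs against a temporary file rather than the real /etc/fstab.

```shell
#!/bin/sh
# Sketch: append a line to a file only if that exact line is not already present.
ensure_line() {
    file=$1
    line=$2
    # -x matches whole lines, -F treats the entry as a fixed string.
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

entry='192.168.1.60:/hana /hana nfs rw,sync,hard,intr 0 0'

# Demonstrate on a scratch file; point it at /etc/fstab (as root) for real use.
tmp=$(mktemp)
ensure_line "$tmp" "$entry"
ensure_line "$tmp" "$entry"   # second call is a no-op
cat "$tmp"
rm -f "$tmp"

# On the HANA node, `mkdir -p /hana && mount /hana` would then mount the share.
```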

Install in multiple-container (multitenant) mode so the system can be reused in a future lab.

hdb-master:/mnt/hgfs/INST/51050506/DATA_UNITS/HDB_SERVER_LINUX_X86_64 # ./hdbinst

SAP HANA Database installation kit detected.

SAP HANA Lifecycle Management - Database Installation 1.00.110.00.1447753075

****************************************************************************

Enter Local Host Name [hdb-master]:

Enter Installation Path [/hana/shared]:

Enter SAP HANA System ID: HDB

Enter Instance Number [00]: 59

Index | Database Mode | Description

-----------------------------------------------------------------------------------------------

1 | single_container | The system contains one database

2 | multiple_containers | The system contains one system database and 1..n tenant databases

Select Database Mode / Enter Index [1]: 2

Index | Database Isolation | Description

----------------------------------------------------------------------------

1 | low | All databases are owned by the sidadm user

2 | high | Each database has its own operating system user

Select Database Isolation / Enter Index [1]:

Index | System Usage | Description

-------------------------------------------------------------------------------

1 | production | System is used in a production environment

2 | test | System is used for testing, not production

3 | development | System is used for development, not production

4 | custom | System usage is neither production, test nor development

Select System Usage / Enter Index [4]:

Enter System Administrator (hdbadm) Password:

Confirm System Administrator (hdbadm) Password:

Enter System Administrator Home Directory [/usr/sap/HDB/home]:

Enter System Administrator User ID [1000]:

Enter System Administrator Login Shell [/bin/sh]:

Enter ID of User Group (sapsys) [79]:

Enter Location of Data Volumes [/hana/shared/HDB/global/hdb/data]: /hana/data

Enter Location of Log Volumes [/hana/shared/HDB/global/hdb/log]: /hana/log

Restrict maximum memory allocation? [n]:

Enter Database User (SYSTEM) Password:

Confirm Database User (SYSTEM) Password:

Restart system after machine reboot? [n]:

Summary before execution:

Installation Path: /hana/shared

SAP HANA System ID: HDB

Instance Number: 59

Database Mode: multiple_containers

Database Isolation: low

System Usage: custom

System Administrator Home Directory: /usr/sap/HDB/home

System Administrator Login Shell: /bin/sh

System Administrator User ID: 1000

ID of User Group (sapsys): 79

Location of Data Volumes: /hana/data

Location of Log Volumes: /hana/log

Local Host Name: hdb-master

Do you want to continue? (y/n): y

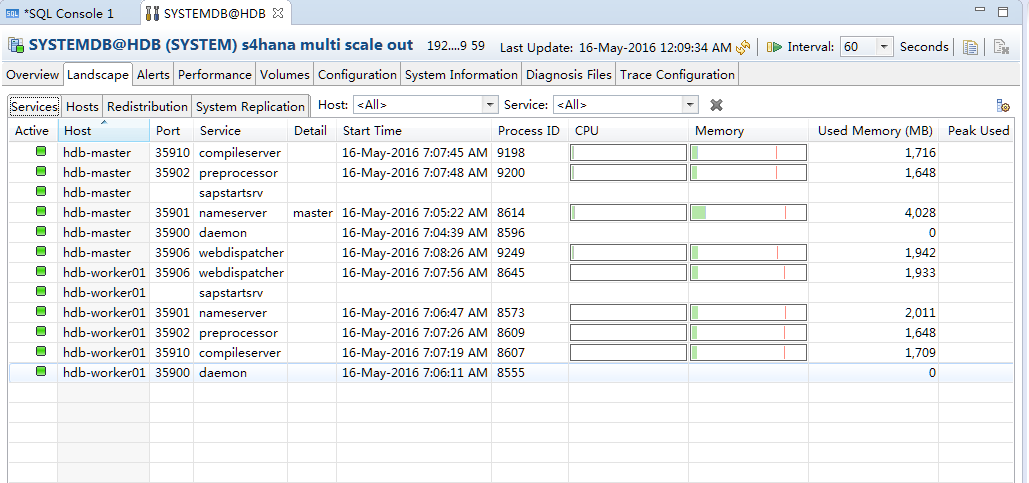

After the instance starts, run su - hdbadm or just use top -u hdbadm to confirm that all services are running.

hdb-master:/mnt/hgfs/INST/51050506/DATA_UNITS/HDB_SERVER_LINUX_X86_64 # top -u hdbadm

top - 03:48:48 up 12:09, 2 users, load average: 0.31, 0.30, 0.35

Tasks: 200 total, 1 running, 199 sleeping, 0 stopped, 0 zombie

Cpu(s): 0.1%us, 0.3%sy, 0.0%ni, 99.7%id, 0.0%wa, 0.0%hi, 0.0%si, 0.0%st

Mem: 32229M total, 10101M used, 22128M free, 35M buffers

Swap: 16383M total, 0M used, 16383M free, 3549M cached

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

8951 hdbadm 20 0 7604m 5.1g 597m S 1 16.1 90:30.12 hdbnameserver

9117 hdbadm 20 0 2948m 573m 147m S 0 1.8 3:29.96 hdbcompileserve

9119 hdbadm 20 0 2808m 468m 141m S 0 1.5 3:15.47 hdbpreprocessor

9152 hdbadm 20 0 3113m 692m 144m S 0 2.1 2:55.68 hdbwebdispatche

8067 hdbadm 20 0 103m 27m 6104 S 0 0.1 0:09.10 sapstartsrv

8925 hdbadm 20 0 23116 1688 964 S 0 0.0 0:00.01 sapstart

8934 hdbadm 20 0 506m 286m 271m S 0 0.9 0:03.22 hdb.sapHDB_HDB5

16243 hdbadm 20 0 8944 1236 860 R 0 0.0 0:00.10 top

16132 hdbadm 20 0 13896 2792 1600 S 0 0.0 0:00.07 sh
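Reading `top` by eye works, but the same check can be scripted. The sketch below assumes a list of expected process names taken from the output above and compares it with names read from standard input, for example from `ps -u hdbadm -o comm=`. The helper name `missing_services` and the `EXPECTED` list are assumptions for illustration; adjust the list to the services your own system runs.

```shell
#!/bin/sh
# Sketch: report which expected SAP HANA processes are missing.
# Names are the 15-character forms shown by top/ps (hence "hdbcompileserve").
EXPECTED="hdbnameserver hdbcompileserve hdbpreprocessor hdbwebdispatche sapstartsrv"

# Reads process names on stdin, one per line.
# Prints each missing name and returns 1 if any are missing.
missing_services() {
    running=$(cat)
    miss=0
    for name in $EXPECTED; do
        if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
            echo "missing: $name"
            miss=1
        fi
    done
    return $miss
}

# Example with canned input; on a live node use:
#   ps -u hdbadm -o comm= | missing_services
printf '%s\n' hdbnameserver hdbcompileserve hdbpreprocessor hdbwebdispatche sapstartsrv \
    | missing_services && echo "all expected services are running"
```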

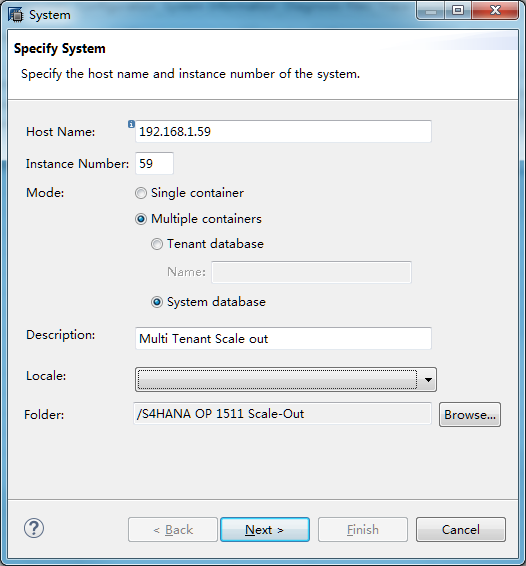

Now you can add the system in SAP HANA Studio to connect to this new SAP HANA system.
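HANA Studio asks for the host name and the instance number, and the TCP ports follow from the instance number. As a sketch using the standard SAP port pattern (3NN13 for the system database SQL port in a multiple-container system, 5NN13 and 5NN14 for the sapstartsrv HTTP and HTTPS ports), the ports for instance 59 can be derived as below. The helper name `hana_ports` is hypothetical; verify the actual ports on your own system before opening firewall rules.

```shell
#!/bin/sh
# Sketch: derive well-known SAP HANA ports from a two-digit instance number.
hana_ports() {
    nn=$1
    case $nn in
        [0-9][0-9]) ;;
        *) echo "instance number must be two digits" >&2; return 1 ;;
    esac
    echo "systemdb_sql=3${nn}13"
    echo "sapstartsrv_http=5${nn}13"
    echo "sapstartsrv_https=5${nn}14"
}

# Instance number 59 as chosen during the installation.
hana_ports 59
```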


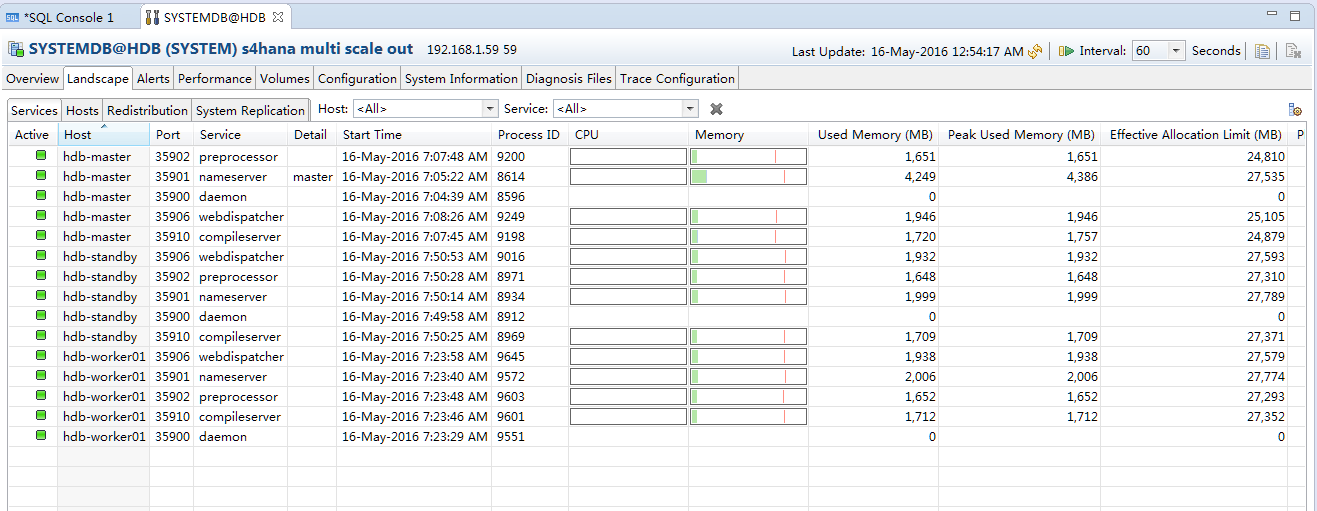

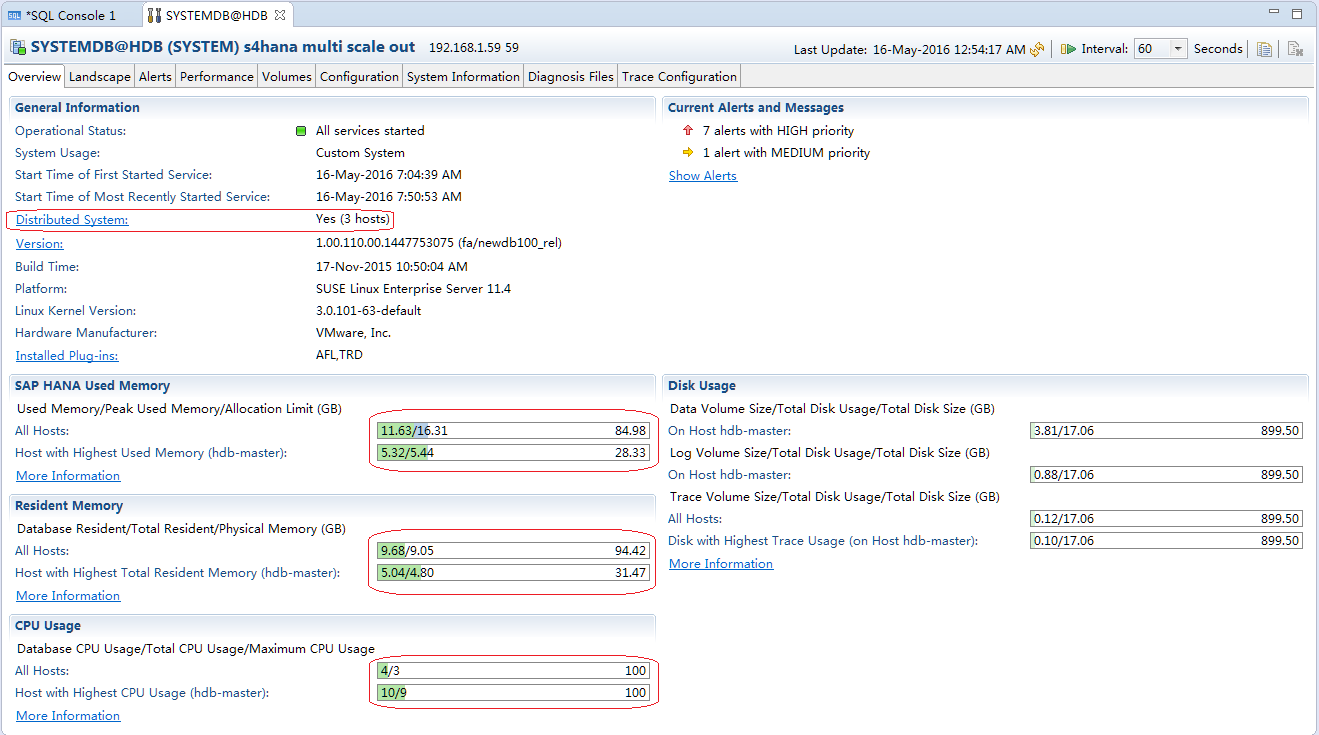

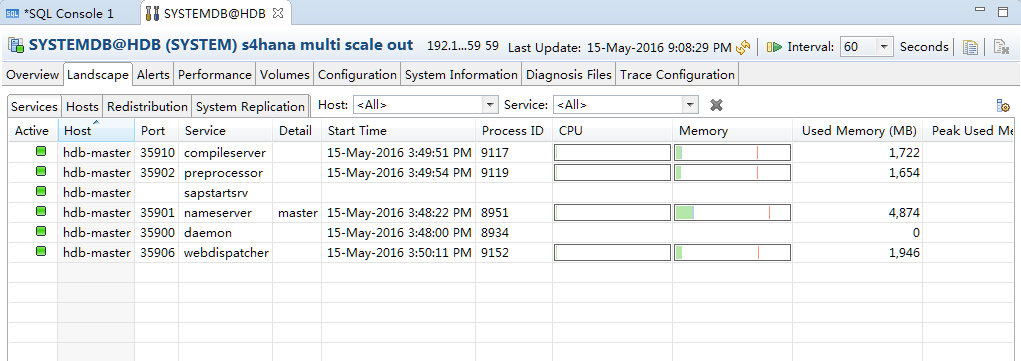

Slave server added successfully! Let's take a look at HANA Studio.
